High Availability of Replicated Data Queue Manager

Introduction

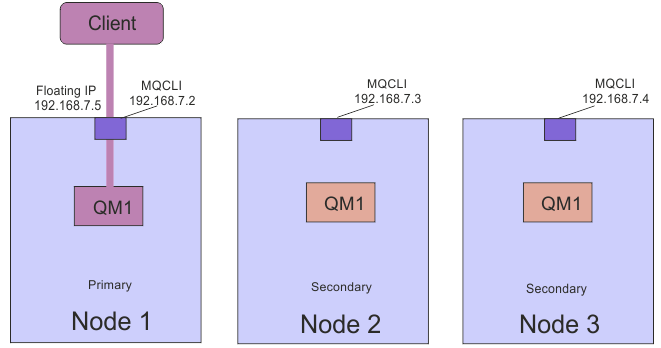

RDQM (replicated data queue manager) is a high availability solution that is available on Linux platforms. An RDQM configuration has three servers configured in a high availability (HA) group. Each node has an instance of the queue manager. One instance is the running queue manager (on the primary node), which synchronously replicates its data to the other two instances (on the secondary nodes). If the server running this queue manager fails, another instance of the queue manager starts on a different node and continues operating with the current data. RDQM is supported on RHEL v7 x86-64 only.

In a two-node high availability system, a split-brain situation can occur when the connectivity between the two nodes breaks. RDQM uses a three-node system with quorum to avoid the split-brain condition. Nodes that can communicate with at least one of the other nodes form a quorum. Queue managers can only run on a node that has quorum.
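The rule that a node must be able to reach at least one other node can be sketched in a few lines of shell. This is only an illustration of the majority logic in a three-node group: the function name `has_quorum` and its argument are hypothetical, and the real decision is made by the cluster software, not by a script like this.

```shell
#!/bin/sh
# Illustrative only: models the majority rule for a three-node HA group.
# has_quorum is a hypothetical helper, not part of IBM MQ or Pacemaker.

# $1 = number of OTHER nodes this node can currently reach (0, 1 or 2)
has_quorum() {
    reachable_peers=$1
    # A node counts itself plus the peers it can reach. In a group of three,
    # a majority is two, so reaching at least one peer is enough.
    if [ $((reachable_peers + 1)) -ge 2 ]; then
        echo "quorum"
    else
        echo "no quorum"
    fi
}

for peers in 0 1 2; do
    echo "reachable peers: $peers -> $(has_quorum "$peers")"
done
```

An isolated node (zero reachable peers) never has a majority, which is why two halves of a partitioned group cannot both run the same queue manager.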

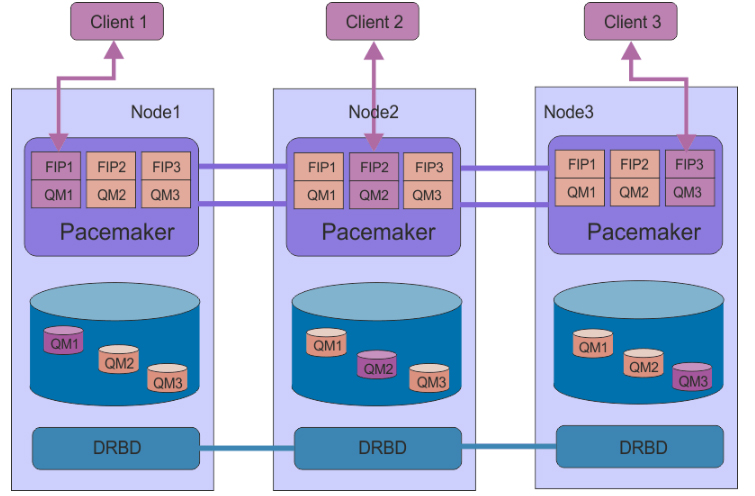

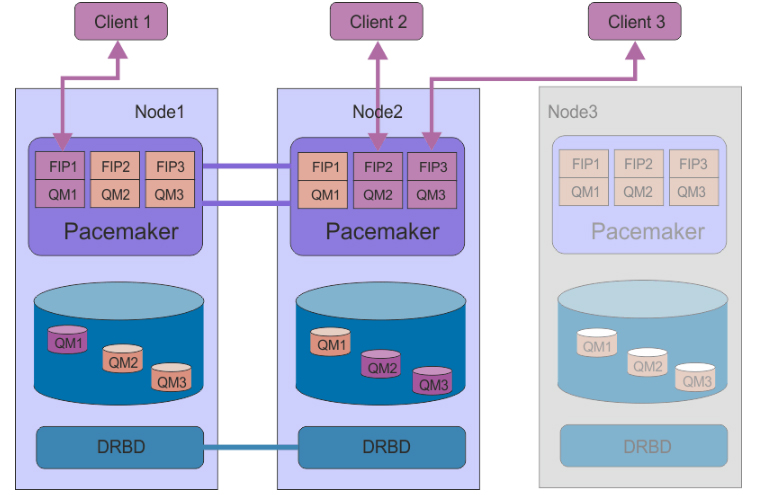

Example of HA group with three RDQMs

Node3 has failed, the Pacemaker links have been lost, and queue manager QM3 runs on Node2 instead of Node3.

Requirements of RDQM Setup

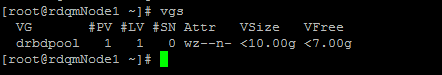

Install the prerequisite packages for the Pacemaker cluster. Each node requires a volume group called drbdpool.

Add a physical interface (network adapter) on each node to host the floating IP address.
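One way to create the drbdpool volume group is with the standard LVM tools. The sketch below only prints the commands so they can be reviewed first. The device name `/dev/sdb` is an assumption (it is not given in this article), and `drbdpool_commands` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: prints the LVM commands that would create the drbdpool volume group.
# DISK is an assumption; substitute the spare block device on your node.
DISK=${DISK:-/dev/sdb}

drbdpool_commands() {
    echo "pvcreate $1"            # initialise the disk as an LVM physical volume
    echo "vgcreate drbdpool $1"   # create the volume group RDQM expects
    echo "vgs drbdpool"           # confirm that the volume group exists
}

# Print only; review the output, then run the commands as root on each node.
drbdpool_commands "$DISK"
```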

Installation of RDQM Support and Firewall Configuration

Install the MQ packages on each node, then move to the MQSoftwareLocation/MQServer/Advanced/RDQM directory and run the installRDQMsupport script to install the RDQM, DRBD and Pacemaker RPM packages.

[root@rdqmNode1 RDQM]# ./installRDQMsupport

The firewall between the nodes in the HA group must allow traffic between the nodes on a range of ports. Run the script MQ_INSTALLATION_PATH/samp/rdqm/firewalld/configure.sh on each node to configure the firewall.

[root@rdqmNode1 firewalld]# ./configure.sh

Defining the Pacemaker cluster (HA group)

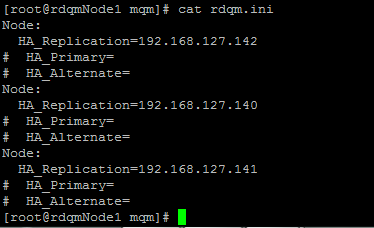

To define the Pacemaker cluster, edit the /var/mqm/rdqm.ini file on each node so that it lists the IP addresses of the three servers. The file must be the same on every node.

Run the following command on each node to configure the replicated data subsystem.

rdqmadm -c
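As a rough guide to what the file might look like, the sketch below writes a three-node definition to a scratch file for review. It assumes the `Node:` / `HA_Replication=` stanza layout described in the IBM MQ documentation and reuses the example node addresses from this article as replication addresses. The output path and the helper name `write_rdqm_ini` are hypothetical; check the layout against the documentation for your MQ version before copying anything into /var/mqm/rdqm.ini.

```shell
#!/bin/sh
# Sketch: writes a three-node rdqm.ini to a scratch file for review.
# OUT is a hypothetical scratch path, not the live /var/mqm/rdqm.ini.
OUT=${OUT:-./rdqm.ini.example}

write_rdqm_ini() {
    # $1 = output file, $2..$4 = replication IP address of each node
    cat > "$1" <<EOF
Node:
  HA_Replication=$2
Node:
  HA_Replication=$3
Node:
  HA_Replication=$4
EOF
}

# Example addresses taken from this article; replace with your own.
write_rdqm_ini "$OUT" 192.168.127.144 192.168.127.145 192.168.127.146
cat "$OUT"
```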

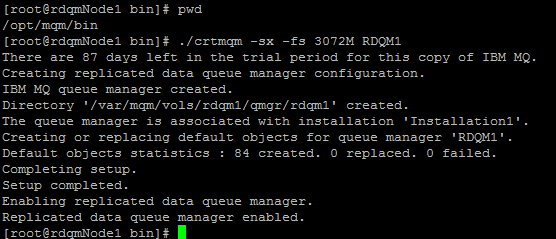

Creating Replicated Data Queue Manager as a root user

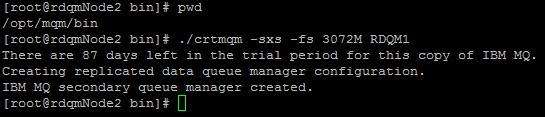

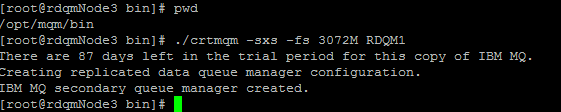

Run the following command on the secondary nodes (rdqmNode2 and rdqmNode3) to create the secondary queue manager RDQM1:

crtmqm -sxs -fs sizeOfFilesystem QmgrName

./crtmqm -sxs -fs 3072M RDQM1

Then, on the primary node (rdqmNode1), run the following command to create the primary queue manager RDQM1:

crtmqm -sx -fs sizeOfFilesystem QmgrName

./crtmqm -sx -fs 3072M RDQM1

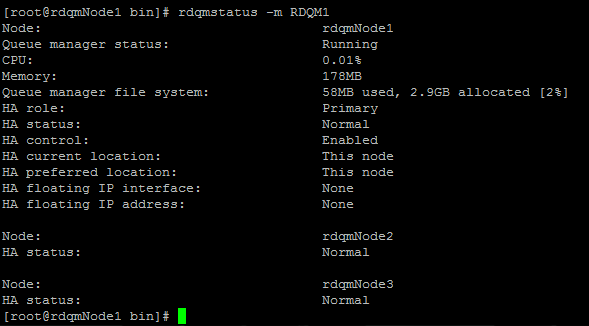

Status of RDQM

To check the status of an RDQM, run:

rdqmstatus -m qmgrName

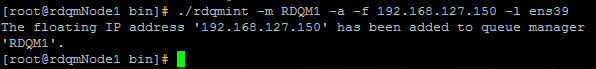

Adding Floating IP Address

Add a network adapter (physical interface) on each node. The floating IP address must belong to the same subnet as the physical interfaces on the three nodes. In this example, the physical interfaces on the three nodes have the IP addresses 192.168.127.144, 192.168.127.145 and 192.168.127.146.

To add the floating IP address 192.168.127.150 (same subnet as the three nodes), run the following on the primary node:

rdqmint -m qmgrName -a -f ipv4address -l interfaceName

./rdqmint -m RDQM1 -a -f 192.168.127.150 -l ens39
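Before adding the floating IP address, it can help to confirm that it shares a subnet with each node's interface. The sketch below does this with a simple string comparison and assumes a /24 netmask, which this article does not state. The helper name `same_subnet_24` is hypothetical.

```shell
#!/bin/sh
# Sketch: checks that the floating IP shares a /24 subnet with each node.
# Assumes a 255.255.255.0 netmask; adjust the logic for other prefix lengths.
FLOATING_IP=192.168.127.150

same_subnet_24() {
    # Strip the last octet from both addresses and compare what remains.
    [ "${1%.*}" = "${2%.*}" ]
}

for node_ip in 192.168.127.144 192.168.127.145 192.168.127.146; do
    if same_subnet_24 "$FLOATING_IP" "$node_ip"; then
        echo "$node_ip: same /24 as $FLOATING_IP"
    else
        echo "$node_ip: different subnet from $FLOATING_IP"
    fi
done
```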

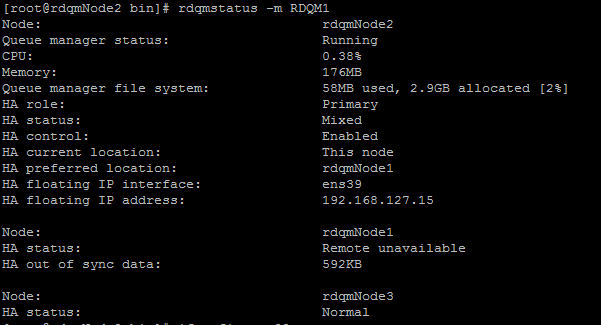

Single Node Failure Situation

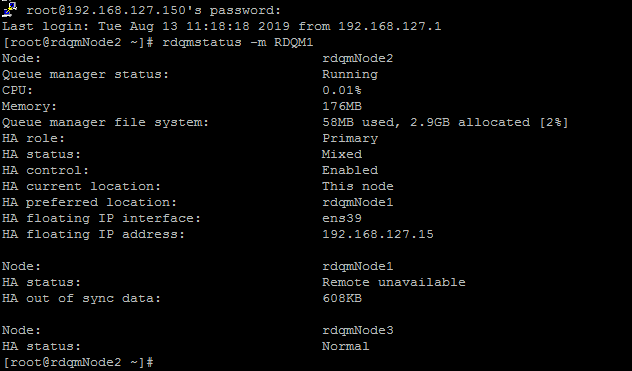

When rdqmNode1 is down, the queue manager runs on rdqmNode2.

The floating IP address (192.168.127.150) is now bound to the interface on rdqmNode2.

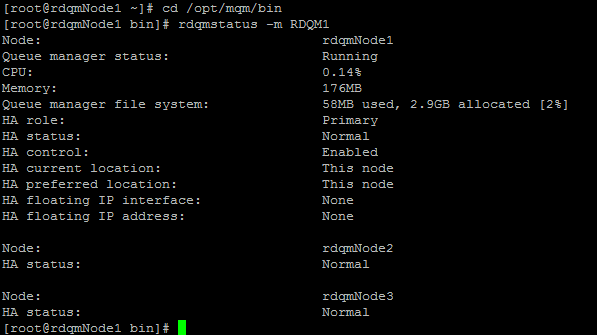

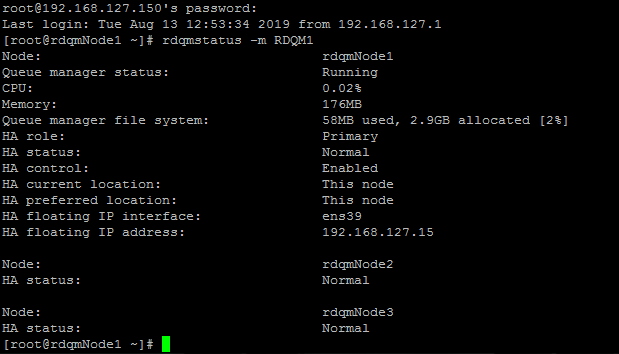

When rdqmNode1 is back up, the queue manager runs on rdqmNode1 again.

The floating IP address is bound to the interface on rdqmNode1 once more.
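To see which node currently holds the floating IP address during a failover test, something like the following could be run on each node. It is a minimal sketch that relies on the standard Linux `ip` command; `holds_ip` is a hypothetical helper, and the address is the example value from this article.

```shell
#!/bin/sh
# Sketch: reports whether the floating IP is bound on the node where it runs.
# holds_ip is a hypothetical helper; it reads `ip -4 -o addr show` style
# output on stdin and looks for "inet <address>/" as a fixed string.
FLOATING_IP=${FLOATING_IP:-192.168.127.150}

holds_ip() {
    grep -qF "inet $1/"
}

# Only query the interfaces if the ip command is available on this host.
if command -v ip >/dev/null 2>&1; then
    if ip -4 -o addr show | holds_ip "$FLOATING_IP"; then
        echo "$FLOATING_IP is bound on $(uname -n)"
    else
        echo "$FLOATING_IP is not bound on $(uname -n)"
    fi
fi
```

Running it on all three nodes before and after stopping rdqmNode1 should show the address moving together with the queue manager.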

RDQM DR (Disaster Recovery)

RDQM DR has two nodes, primary and secondary. The primary instance of a DR queue manager runs on one server, and another server acts as a recovery node, where the secondary instance of the queue manager resides. Data is replicated between the queue manager instances. Replication of data between the primary and secondary queue managers can be synchronous or asynchronous. If you lose the primary queue manager, you can manually make the secondary instance into the primary instance and start the queue manager, then resume work from the same place.

Conclusion

High availability is the ability of a system to remain continuously operational for a suitably long period of time, even in the event of some component failures. Royal Cyber’s MQ experts have expertise in designing for continuous availability, typically with no data loss. Watch this video for more information on replicated data queue manager. You can also email us at info@royalcyber.com or visit www.royalcyber.com.
